Record Traffic and Store in Google Cloud Storage (GCS)

We've recently introduced Google Cloud Storage (GCS) as a storage option for traffic recording in Kubeshark. This integration is especially valuable in Kubernetes, whose highly distributed architecture relies on network-based API calls to execute business logic.

Why It's Important

Deep network observability, as provided by Kubeshark, is essential for pinpointing production incidents, bugs, and performance issues, because it lets you trace business-logic transactions across the network. Real-time visibility is valuable, but some issues are not apparent at the moment they occur.

Traffic recording in Kubeshark addresses this challenge. You set a pattern that selects which traffic to record, and later investigate the recorded traffic offline in a dashboard with advanced filtering. This extends your diagnostic capabilities within Kubernetes.

How It's Done

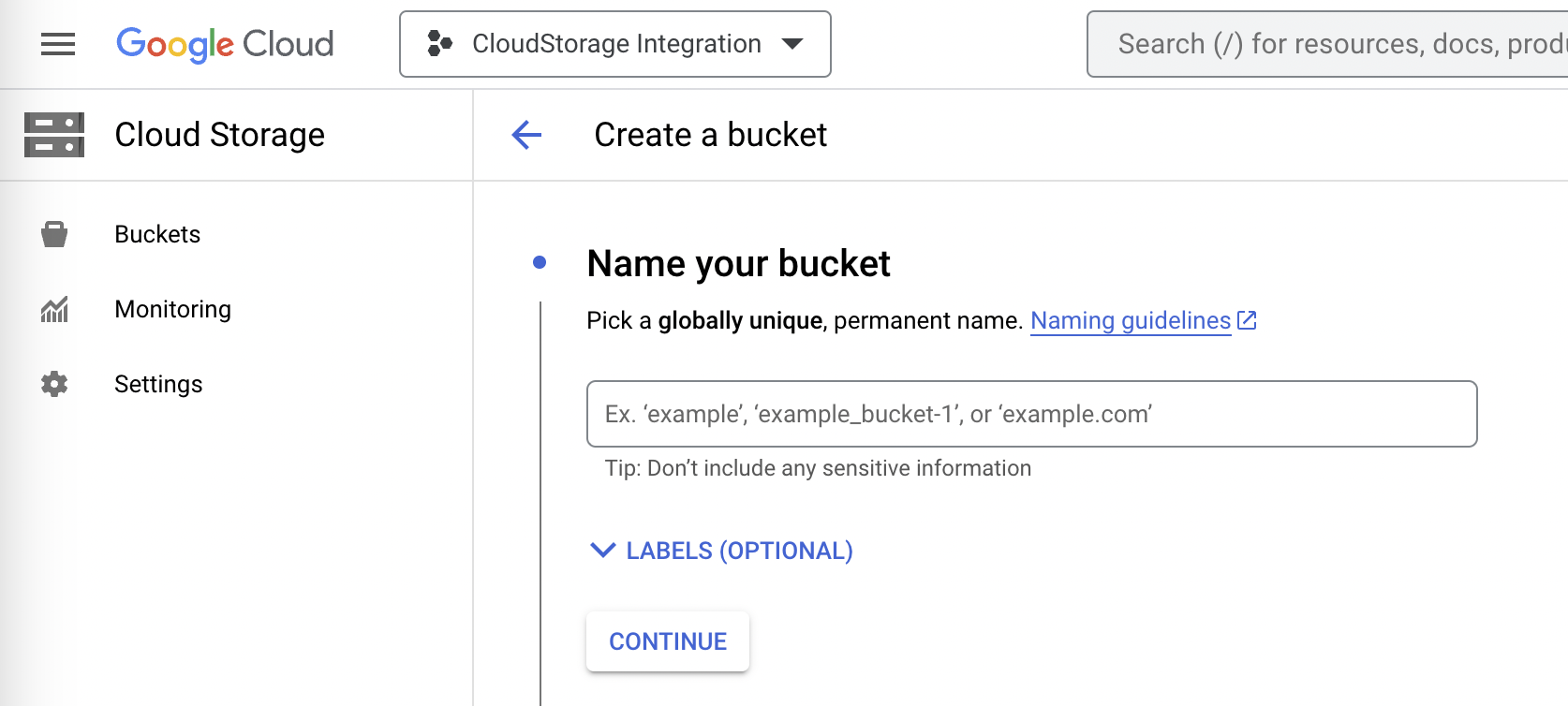

1. Assign a GCS Bucket

First, assign a GCS bucket for storing recorded traffic.

2. Create a Service Account (or Use an Existing One)

Create a key for the service account and download it. You will provide this key later as part of the Kubeshark configuration. The service account needs permission to write objects to the bucket.
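The downloaded key is a JSON file. Before you place it in the configuration, it can help to check that it really is a service-account key and to collapse it onto a single line. The following is a minimal sketch of that check. It assumes that a compact, single-line JSON string is a convenient form for the configuration value. That is an assumption: the exact format is defined by the recording script. The function name `compact_service_account_key` is hypothetical.

```python
import json

# Fields present in every Google Cloud service-account JSON key file.
REQUIRED_FIELDS = ("type", "project_id", "private_key", "client_email")


def compact_service_account_key(raw_json: str) -> str:
    """Validate a service-account key and return it as single-line JSON.

    Hypothetical helper; a single-line form is assumed to be convenient
    for embedding the key in a configuration value.
    """
    key = json.loads(raw_json)
    missing = [field for field in REQUIRED_FIELDS if field not in key]
    if missing:
        raise ValueError(f"key is missing fields: {', '.join(missing)}")
    if key["type"] != "service_account":
        raise ValueError(f"expected a service-account key, got type={key['type']!r}")
    # Compact separators drop the indentation and line breaks of the downloaded
    # file; newlines inside the private key stay escaped as \n.
    return json.dumps(key, separators=(",", ":"))
```

A typical call would be `compact_service_account_key(Path("my-key.json").read_text())`, where `my-key.json` is a placeholder for the file you downloaded.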

3. Traffic Recording Script

Recording is implemented as a Kubeshark script, and scripts can be tailored to specific needs. Download the script from the provided GitHub repository and customize it as required.

4. Traffic Recording Pattern

Set the recording pattern as a KFL filter in the RECORDING_KFL environment variable. L4 streams that match the filter are temporarily stored for recording. Here are a few example KFL filters:

- http or dns - Filter L4 streams that include either HTTP or DNS traffic

- src.namespace=="my-namespace" or dst.namespace=="my-namespace" - Filter L4 streams that carry egress or ingress traffic of the namespace my-namespace

- src.name==r"cata.*" - Filter L4 streams whose source pod name matches the regular expression cata.*
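To make the filters above concrete, the sketch below models each one as a Python predicate over a simplified, hypothetical stream record (`StreamInfo`). It does not reproduce the KFL engine. Its purpose is to show the `or` semantics and which streams each pattern keeps. The field names are hypothetical. Regular-expression matching is assumed to be anchored at the start of the name, and KFL's exact matching rules may differ.

```python
import re
from dataclasses import dataclass


@dataclass
class StreamInfo:
    """Hypothetical, simplified metadata for one L4 stream."""
    protocols: set[str]   # L7 protocols seen in the stream, e.g. {"http"}
    src_namespace: str
    dst_namespace: str
    src_name: str         # name of the source pod


def http_or_dns(s: StreamInfo) -> bool:
    # Mirrors: http or dns
    return "http" in s.protocols or "dns" in s.protocols


def in_namespace(ns: str):
    # Mirrors: src.namespace=="<ns>" or dst.namespace=="<ns>"
    def predicate(s: StreamInfo) -> bool:
        return s.src_namespace == ns or s.dst_namespace == ns
    return predicate


def src_name_matches(pattern: str):
    # Mirrors: src.name==r"<pattern>" (assumed: match from the start of the name)
    compiled = re.compile(pattern)

    def predicate(s: StreamInfo) -> bool:
        return compiled.match(s.src_name) is not None
    return predicate


def select(streams, predicate):
    """Return the streams a recording pattern would keep."""
    return [s for s in streams if predicate(s)]
```

The namespace filter needs both sides of the `or`. A stream counts as belonging to the namespace whether the namespace is the source (egress) or the destination (ingress).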

5. Kubeshark Configuration

The Kubeshark configuration specifies the GCS bucket, the service-account key, the location of the scripts folder, and the RECORDING_KFL pattern.
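The sketch below assembles those pieces into a configuration structure and prints it. Several details are assumptions for illustration and should be checked against the script you downloaded: the `scripting.env` / `scripting.source` layout, the variable names `GCS_BUCKET` and `GCS_SA_KEY_JSON`, and the helper `build_recording_config`. The bucket name and paths are placeholders. The output is printed as JSON, which is also valid YAML.

```python
import json
from typing import Optional


def build_recording_config(bucket: str, sa_key_json: str, scripts_dir: str,
                           recording_kfl: Optional[str] = None) -> dict:
    """Hypothetical helper assembling the recording part of a Kubeshark config.

    The key layout and the GCS_* variable names are assumptions.
    """
    env = {
        "GCS_BUCKET": bucket,          # assumed variable name
        "GCS_SA_KEY_JSON": sa_key_json,  # assumed variable name
    }
    if recording_kfl:
        # Recording is active only while RECORDING_KFL is set;
        # leaving it out corresponds to deactivating recording.
        env["RECORDING_KFL"] = recording_kfl
    return {"scripting": {"env": env, "source": scripts_dir}}


config = build_recording_config(
    bucket="my-recordings-bucket",
    sa_key_json='{"type":"service_account"}',
    scripts_dir="/home/me/kubeshark-scripts",
    recording_kfl="http or dns",
)
# JSON is valid YAML, so this output can be pasted into a YAML config file.
print(json.dumps(config, indent=2))
```

Calling the helper without `recording_kfl` yields the same configuration minus the pattern. That is the state in which recording is off.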

6. Start Recording

Run Kubeshark to start recording. Neither an open dashboard nor a Kubernetes proxy is required.

7. Deactivating Recording

Remove the RECORDING_KFL variable from the configuration file to stop recording.

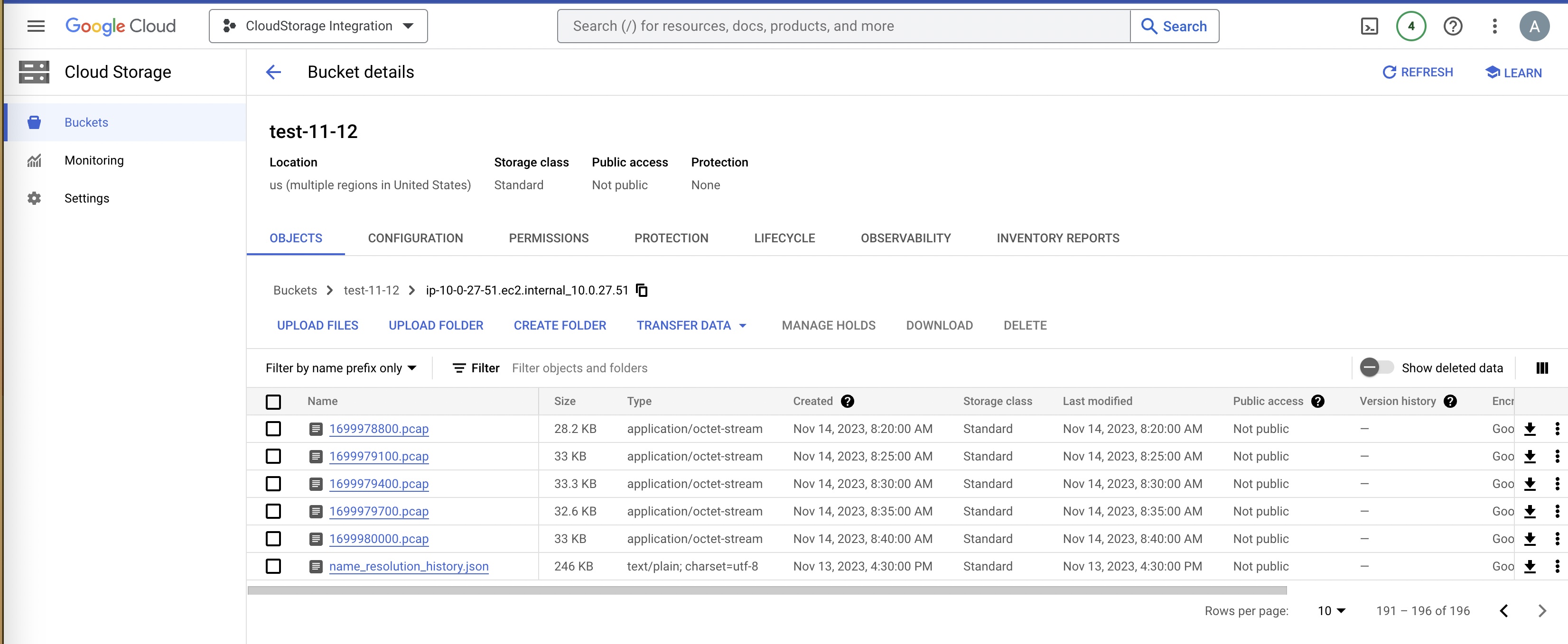

8. Viewing the PCAP Files

Access and download PCAP files from the GCS console as needed.
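After downloading a file, you can run a quick sanity check that counts its packet records, using only the Python standard library. This sketch assumes the recordings use the classic libpcap format (24-byte global header, then 16-byte record headers). It does not handle pcapng. The function name `count_packets` is hypothetical.

```python
import struct

# Magic numbers of the classic libpcap format (microsecond and nanosecond variants).
PCAP_MAGICS = {0xA1B2C3D4, 0xA1B23C4D}


def count_packets(data: bytes) -> int:
    """Return the number of packet records in a classic (non-pcapng) PCAP file."""
    if len(data) < 24:
        raise ValueError("too short to be a PCAP file")
    # The magic number reveals the byte order the file was written in.
    for endian in ("<", ">"):
        (magic,) = struct.unpack(endian + "I", data[:4])
        if magic in PCAP_MAGICS:
            break
    else:
        raise ValueError("not a classic PCAP file (pcapng is not handled here)")

    offset, packets = 24, 0  # skip the 24-byte global header
    while offset + 16 <= len(data):
        # Record header: ts_sec, ts_fraction, incl_len (bytes stored), orig_len
        _, _, incl_len, _ = struct.unpack(endian + "IIII", data[offset:offset + 16])
        offset += 16 + incl_len
        if offset > len(data):
            raise ValueError("truncated packet record")
        packets += 1
    if offset != len(data):
        raise ValueError("trailing bytes after the last record")
    return packets
```

For example, `count_packets(Path("stream.pcap").read_bytes())` checks a downloaded file, where `stream.pcap` is a placeholder name.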

9. Analyzing the Traffic Offline

> Note: this functionality is deprecated.

Point Kubeshark's dashboard at the GCS bucket for offline analysis. Alternatively, run the Kubeshark CLI in Docker mode to view the files locally.

This requires gsutil to be installed and configured with access to the GCS bucket.

TL;DR - the Fine Details

Script Description

L7 Hook

This code runs every time a new request-response pair is successfully dissected. Each such pair belongs to an L4 stream that is stored in a local PCAP file. That PCAP file has a very short TTL (e.g., 10 seconds), which leaves just enough time for the script to copy it elsewhere. This hook is responsible for copying the L4 stream to an immutable folder.

> Read more about this hook in the Hooks section.
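The hook's job can be modelled in Python as shown below. This is not the actual Kubeshark script. It assumes that the KFL match has already been evaluated and is passed in as `matches_kfl`, and that the stream's PCAP is a regular local file. The function name and the copy-on-every-pair behaviour are hypothetical design choices for the sketch.

```python
import shutil
from pathlib import Path
from typing import Optional


def on_pair_dissected(stream_pcap: Path, immutable_dir: Path,
                      matches_kfl: bool) -> Optional[Path]:
    """Hypothetical stand-in for the L7 hook; runs once per dissected pair.

    Copies the pair's L4 stream PCAP out of its short-TTL location so the
    file survives until the periodic upload picks it up.
    """
    if not matches_kfl:
        return None
    if not stream_pcap.exists():
        # The TTL already expired and the temporary file is gone.
        return None
    immutable_dir.mkdir(parents=True, exist_ok=True)
    target = immutable_dir / stream_pcap.name
    # A stream usually carries several pairs; copying again on each pair
    # overwrites the earlier copy with the stream's latest packets.
    shutil.copy2(stream_pcap, target)
    return target
```

Copying on every pair, not just the first one, is a choice made for the sketch. It keeps the preserved copy current while the stream is still receiving packets, at the cost of repeated copies.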

Periodic Upload to GCS

L4 streams that match the KFL filter and have been copied to the immutable folder are periodically uploaded to the GCS bucket.
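The periodic upload can be sketched as a loop that sends every PCAP in the immutable folder to the bucket. The upload step is injected as a callable, so the sketch runs without GCS. With the google-cloud-storage client, it could be `lambda path, name: bucket.blob(name).upload_from_filename(str(path))`, with `bucket` obtained from `storage.Client.from_service_account_json(...).bucket(...)`. Three aspects are assumptions of this sketch and may not match the real script: deleting each file after a successful upload, the 60-second interval, and the helper names.

```python
import time
from pathlib import Path
from typing import Callable, Optional

# Upload step: (local file, object name) -> None; expected to raise on failure.
Uploader = Callable[[Path, str], None]


def upload_pending(immutable_dir: Path, upload: Uploader, prefix: str = "") -> list[str]:
    """Upload every PCAP in the folder once, deleting each file after success."""
    uploaded = []
    for pcap in sorted(immutable_dir.glob("*.pcap")):
        name = f"{prefix}{pcap.name}"
        upload(pcap, name)  # if this raises, the file is kept for the next round
        pcap.unlink()
        uploaded.append(name)
    return uploaded


def run_periodically(immutable_dir: Path, upload: Uploader,
                     interval_s: float = 60.0, rounds: Optional[int] = None) -> None:
    """Call upload_pending every interval_s seconds (forever if rounds is None)."""
    done = 0
    while rounds is None or done < rounds:
        upload_pending(immutable_dir, upload)
        done += 1
        time.sleep(interval_s)
```

If the hook copies a stream again after it was uploaded, the next round uploads it under the same object name. That replaces the earlier object with the newer copy.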